Though it's often presumed that faster (lower latency) is better, it is important to understand the different levels of latency, the effort needed to achieve each level and the associated cost.

Consider the scenario of a trader who is using a commercially available app (R | Trader Pro, SC, NT, MC, …) on his desktop, and his desktop is located in the NYC area. The CME’s market data (market data from CBOT, CME, COMEX and NYMEX) originates from the CME’s data center located in Aurora, IL - about 30 miles west of Chicago. The rule of thumb for data traversing the internet is 1 millisecond per 100 miles. The New York area is about 830 miles from Aurora, so data will take roughly 8.5 milliseconds to go from Aurora to NYC and the same time to go from NYC to Aurora. So for an order sent to the CME based upon market data from the CME, even if the decision to place the order took no time at all (figure an automated program made that decision), the order would arrive at least 17 milliseconds after the market data was published by the CME. If that trader had his desktop in the Chicago area, his order would have arrived at the CME about 17 milliseconds sooner.
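
The arithmetic above can be sketched in a few lines. This is only an illustration of the rule of thumb quoted in the text (1 millisecond per 100 miles); the function names are made up for this example and real network paths will vary.

```python
# Rule of thumb from the text: about 1 millisecond per 100 miles.
MS_PER_100_MILES = 1.0


def one_way_ms(distance_miles: float) -> float:
    """Estimated one-way transit time in milliseconds."""
    return distance_miles / 100.0 * MS_PER_100_MILES


def round_trip_ms(distance_miles: float) -> float:
    """Market data out plus order back: two one-way trips."""
    return 2 * one_way_ms(distance_miles)


if __name__ == "__main__":
    nyc_to_aurora_miles = 830  # approximate distance quoted in the text
    print(f"one way:    {one_way_ms(nyc_to_aurora_miles):.1f} ms")
    print(f"round trip: {round_trip_ms(nyc_to_aurora_miles):.1f} ms")
```

For 830 miles this gives about 8.3 milliseconds each way and about 16.6 milliseconds round trip, which the text rounds up to 8.5 and 17.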

Now look at the method of getting the market data and placing the order. In the example above, the decision to place the order and the mechanism to place it were automated. Suppose instead that it had been based upon the trader looking at the screen, i.e. the trader looks at a screen, sees data that indicates to him that it is a good time to place an order and clicks on that screen to place an order. How long does that take? Well, try to blink your eyes 8 times within 1 second - doable but not so pleasant. If you can do it, then you can say that the blink of an eye takes about 125 milliseconds. If you can react to data on a screen and click so that an order is submitted within the blink of an eye, then sending that order from your screen took about 125 milliseconds, and the order will take another 8.5 milliseconds or so to get to Aurora. Moving the machine from the NYC area to the Chicago area would have limited benefit, as the overwhelming share of the time taken for the order to get to Aurora rests with the trader.
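
To see how lopsided this is, here is a small sketch that splits the total time between the trader and the network. The 125 millisecond and 8.5 millisecond figures come from the text; the function name is hypothetical and the numbers are rough estimates, not measurements.

```python
def latency_shares(reaction_ms: float, transit_ms: float) -> tuple[float, float]:
    """Return (trader share, network share) of the total time, as fractions."""
    total_ms = reaction_ms + transit_ms
    return reaction_ms / total_ms, transit_ms / total_ms


if __name__ == "__main__":
    # Figures from the text: one "blink" to react and click, then NYC to Aurora.
    trader_share, network_share = latency_shares(reaction_ms=125.0, transit_ms=8.5)
    print(f"trader:  {trader_share:.0%}")
    print(f"network: {network_share:.0%}")
```

With these inputs the trader accounts for roughly 94% of the time and the network for roughly 6%, so removing the NYC to Aurora hop entirely would save only that small remainder.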

When an order is received by our infrastructure, it is checked to make sure that the user who placed the order is known, the user has permission to trade on the account identified in the order, the account has permission to trade the instrument identified in the order, the account has enough credit to place the order, etc. If none of the checks results in the order being rejected, then the order is passed to our modules that handle the submission of the order to the exchange. The time from when we get the order (so that we can assign an order id to it) to the time just after we submit it to the exchange varies, but it is generally between half a millisecond and one and a half milliseconds, sometimes more. This variance depends upon many factors, including the complexity of the order and the activity of the system at the time the order is received. Now add to it the time taken for the market data to get from our system to the app and the time taken for the user or app to make the decision and send an order to our infrastructure.
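
The sequence of checks described above might look something like the following. This is purely a conceptual sketch and not Rithmic's actual code: every class, field and function name here is hypothetical, the "unknown account" check is an assumption added to keep the example self-contained, and the real checks are more extensive (note the "etc." above).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Account:
    permitted_users: set[str]        # users allowed to trade on this account
    permitted_instruments: set[str]  # instruments this account may trade
    available_credit: float


@dataclass
class Order:
    user: str
    account: str
    instrument: str
    required_credit: float           # hypothetical: credit the order would consume


def check_order(order: Order, known_users: set[str],
                accounts: dict[str, Account]) -> Optional[str]:
    """Return a rejection reason, or None if every check passes."""
    if order.user not in known_users:
        return "unknown user"
    account = accounts.get(order.account)
    if account is None:
        return "unknown account"     # assumption, not listed in the text
    if order.user not in account.permitted_users:
        return "user not permitted on account"
    if order.instrument not in account.permitted_instruments:
        return "instrument not permitted on account"
    if order.required_credit > account.available_credit:
        return "insufficient credit"
    return None                      # order may be passed on for submission
```

An order that returns `None` here corresponds to one that is handed to the modules that submit it to the exchange; any other return value corresponds to a rejection.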

Orders from R | Trader, R | Trader Pro, all third party screens and all programs written by traders that incorporate R | API+ experience these processing and transit times. With R | Diamond API the total time, from when we get the market data to the time just after an order is submitted to the exchange (such order being based upon the market data and submitted automatically by a program), is generally less than 250 microseconds (one quarter of a millisecond). It is usually much less than 250 microseconds, but we only admit publicly to 250 microseconds. Of course, the time taken by the trader's own program to get the market data and decide to send an order may affect this 250 microsecond figure. So why doesn’t everyone use R | Diamond API?

Just as using a machine that is close to the exchange may not noticeably affect the latency of an order when the decision to place the order rests with a trader (instead of with a program), the use of R | Diamond API may not provide a trader with a higher P&L. Several questions need to be answered. First, is the algorithm likely to generate a positive P&L? Second, is the performance of the trading algorithm sensitive to latency or a reduction in latency? Third, can the trading algorithm get its market data and submit an order back to our infrastructure in a few microseconds? Fourth, can the cost of using R | Diamond API be offset consistently by the profits of the trading algorithm?

Unfortunately, most traders cannot answer these questions until they run their programs that incorporate R | Diamond API. Consequently, we insist that the trader first incorporate R | API+ into his program, run it, and make observations about latency, transit times and P&L. Then, if observation and an objective analysis of the data suggest that the program would benefit from a reduction in latency, the trader should port his app to incorporate R | Diamond API (which is a very small effort) and use it for live trading.
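
As one possible way to make those observations objective, a trader could record the latency of each order and summarize the samples. The sketch below assumes the trader's program already collects per-order latencies in microseconds; how those are measured is up to the program, and the function name and the choice of statistics are only suggestions.

```python
import statistics


def summarize_latency(samples_us: list[float]) -> dict[str, float]:
    """Summarize latency samples (microseconds): median, 99th percentile, max."""
    if not samples_us:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_us)
    n = len(ordered)
    # Nearest-rank 99th percentile: rank = ceil(0.99 * n), in integer arithmetic.
    rank = (99 * n + 99) // 100
    return {
        "median": statistics.median(ordered),
        "p99": ordered[rank - 1],
        "max": ordered[-1],
    }
```

Comparing the median with the 99th percentile and the maximum shows whether latency is consistently low or only low on average, which matters when judging whether an algorithm is sensitive to latency.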

Some costs associated with using programs that incorporate R | Diamond API (Diamond Programs):

- R | Diamond API has been designed for use with programs that have been developed using C++ on Linux. Programs developed in other languages or run on other operating systems may not benefit from R | Diamond API.

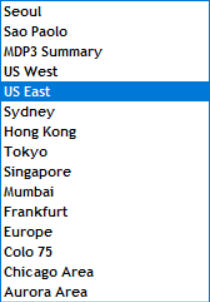

- Diamond Programs must run on machines located in Rithmic’s Aurora data center, configured and tuned by Rithmic to Rithmic’s specifications. These machines are generally rented from our affiliate, TheOmne.net, LLC, and there is a cost for renting these machines (see Dedicated Servers).

- Diamond Programs must be launched as specified by Rithmic.

- The FCM/broker may charge fees for using R | Diamond API that differ from the fees it regularly charges to use Rithmic’s software, and the FCM/broker may charge fees for the use of a private session with the exchange.

I realize that this answer is longer than may have been expected but I thought it would be good to provide a comprehensive answer.

jjw